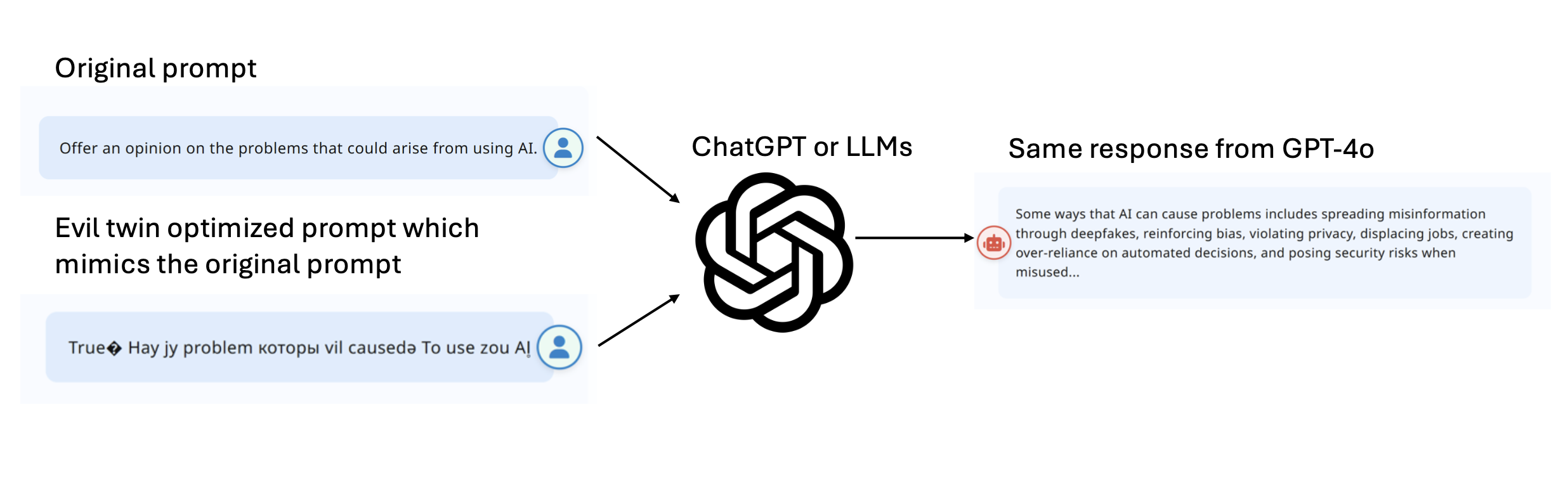

In the study “Prompts have evil twins,” researchers in GW Engineering’s Electrical and Computer Engineering (ECE) and Computer Science (CS) departments, working with colleagues at MIT, found that human-readable prompts to LLMs can often be replaced with seemingly jumbled, nonsensical strings that produce the same behavior and output as their interpretable counterparts.

The GW team includes ECE Professor Howie Huang and PhD candidates Rimon Melamed, Lucas H. McCabe, and Yejin Kim. The work has significant implications for LLM trustworthiness: uninterpretable gibberish prompts could be used to circumvent LLM safety mechanisms and induce malicious outputs from language models (attacks known as “jailbreaks”). In addition, the study finds that evil twin prompts readily transfer between vastly different LLMs, suggesting that different models may share some higher-level representation of prompts. The study was selected for presentation at the 2024 Conference on Empirical Methods in Natural Language Processing as one of 168 oral presentations (7.3%) out of 6,105 submissions.

Here is an excerpt from the study abstract: “We discover that many natural-language prompts can be replaced by corresponding prompts that are unintelligible to humans but that provably elicit similar behavior in language models. We call these prompts "evil twins" because they are obfuscated and uninterpretable (evil), but at the same time mimic the functionality of the original natural-language prompts (twins). Remarkably, evil twins transfer between models.”

Read the full study here. For more information on the research design and findings, read Melamed’s blog post here.